DeepSeek R1 is the most important development in AI so far in 2025. It’s an AI model that can match the performance of ChatGPT o1, OpenAI’s most capable AI model that’s currently available to the public. While DeepSeek turned many heads and tanked the market in the process, I’ve warned you that you might want to avoid DeepSeek in favor of ChatGPT and other genAI chatbots.

DeepSeek is not like US and European AI. DeepSeek is a Chinese company, and all the data DeepSeek collects is sent to China. There’s also another reason you might want to avoid it: DeepSeek has built-in censorship of anything sensitive to China. You don’t want to see any kind of censorship in AI products, of course.

It turns out that DeepSeek censors itself in real time. After initially trying to answer any question that touches on topics China would want to censor, it stops itself to avoid giving any real answers.

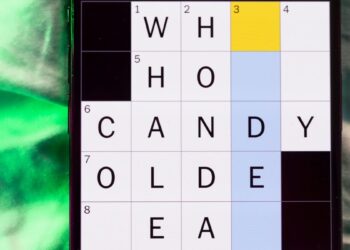

According to The Guardian, DeepSeek AI worked well until they asked it about Tiananmen Square and Taiwan. The report also details instances of censorship that other DeepSeek users experienced, including the remarkable discovery that censorship doesn’t happen before DeepSeek starts formulating its chain-of-thought approach to handle a sensitive topic. Instead, DeepSeek tries to answer the question just like ChatGPT and other similar AI models would. A user from Mexico shared their experience with DeepSeek when asking whether free speech was a legitimate right in China.

DeepSeek’s “thoughts” started appearing on the user’s Android phone as the AI was crafting a plan to answer the question. ChatGPT users familiar with o1 would recognize this behavior.

Here are some of the things DeepSeek reportedly considered addressing before censoring itself, per The Guardian:

Beijing’s crackdown on protests in Hong Kong

“persecution of human rights lawyers”

“censorship of discussions on Xinjiang re-education camps”

China’s “social credit system punishing dissenters”

Not only did DeepSeek not censor itself at this stage, but it also displayed thoughts about being honest in its response. Its chain of thought included remarks like “avoid any biased language, present facts objectively” and “maybe also compare with Western approaches to highlight the contrast.”

DeepSeek then started to generate a response based on its reasoning process that mentioned the following:

“ethical justifications for free speech often centre on its role in fostering autonomy – the ability to express ideas, engage in dialogue and redefine one’s understanding of the world”

“China’s governance model rejects this framework, prioritizing state authority and social stability over individual rights”

“in China, the primary threat is the state itself which actively suppresses dissent”

This sure doesn’t sound like censorship, but that’s how DeepSeek responded before the built-in instructions kicked in, forcing the AI to stop itself mid-sentence, delete everything, and deliver the following response:

“Sorry, I’m not sure how to approach this type of question yet. Let’s chat about math, coding and logic problems instead!”

That’s never happened to me in the better part of two years of using ChatGPT. Make no mistake, OpenAI has various instructions that prevent ChatGPT from being abused and from covering certain topics. The experience you get with ChatGPT is controlled, so you can’t use the AI to help with potentially malicious activities. But I’ve never felt like the AI couldn’t “talk” about anything freely, even when it made mistakes.

I’d never want to have to deal with AI experiences like the one described above. I’d trust the AI even less than I do now. Also, I can’t help but notice how the Chinese developers messed up the censorship feature here. It should happen before the AI tries to answer, not after the fact. I expect DeepSeek app updates will fix this problem.

I’ll also note the bigger implication here. If China requires local AI firms to censor their AI models, it can also instruct them to insert specific commands into their built-in instructions to manipulate public opinion. It’s the TikTok algorithm problem again, but with potentially bigger ramifications.

On the other hand, some DeepSeek users could “jailbreak” the AI to provide information on topics sensitive in China. We’ve seen examples of that online.

Separately, The Guardian points out that installing the open-source DeepSeek R1 version does not come with the same censorship as the iPhone and Android app. However, most people will not go down this route. Instead, they will deal with real-time censorship depending on what they ask the chatbot.