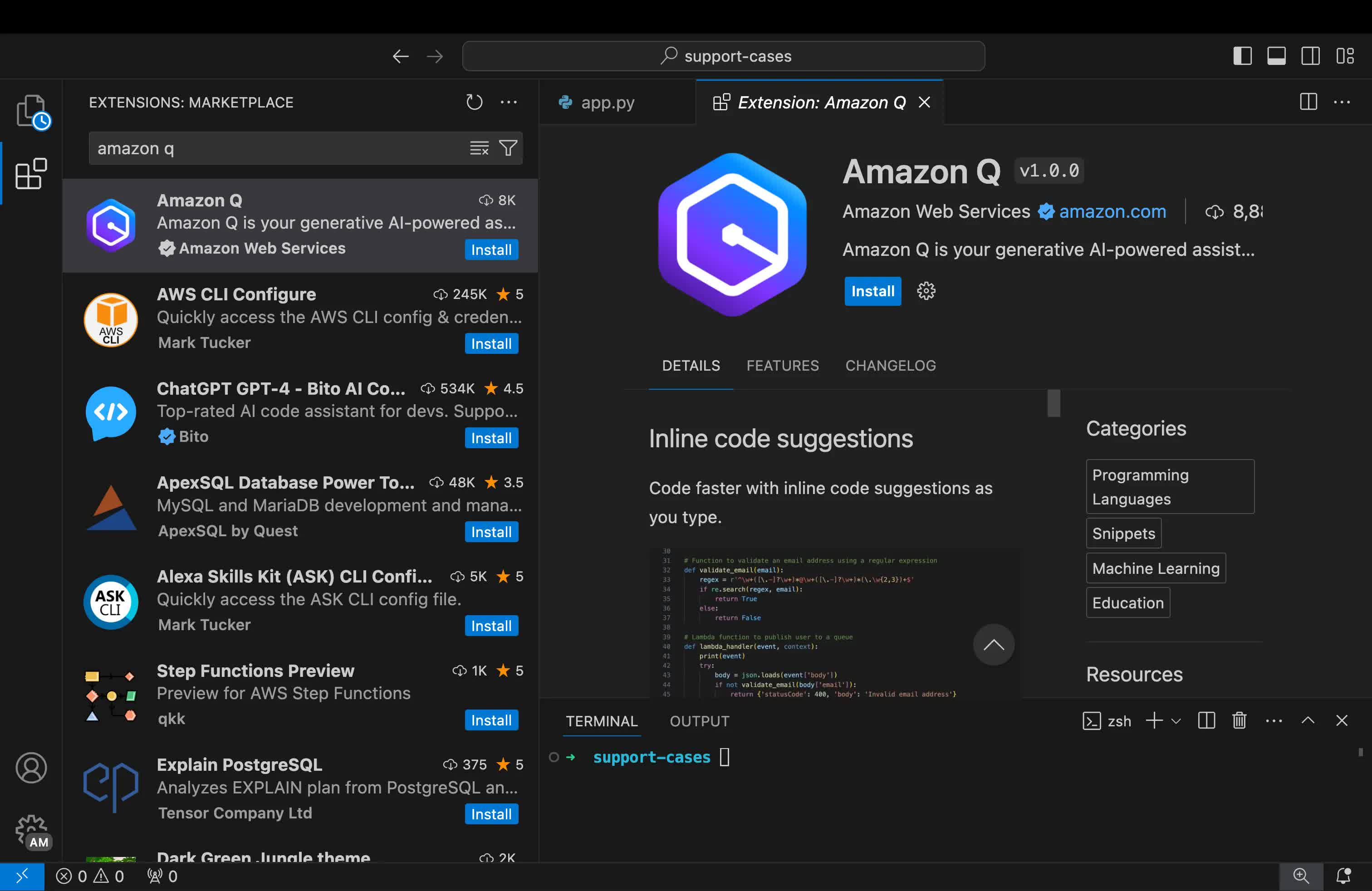

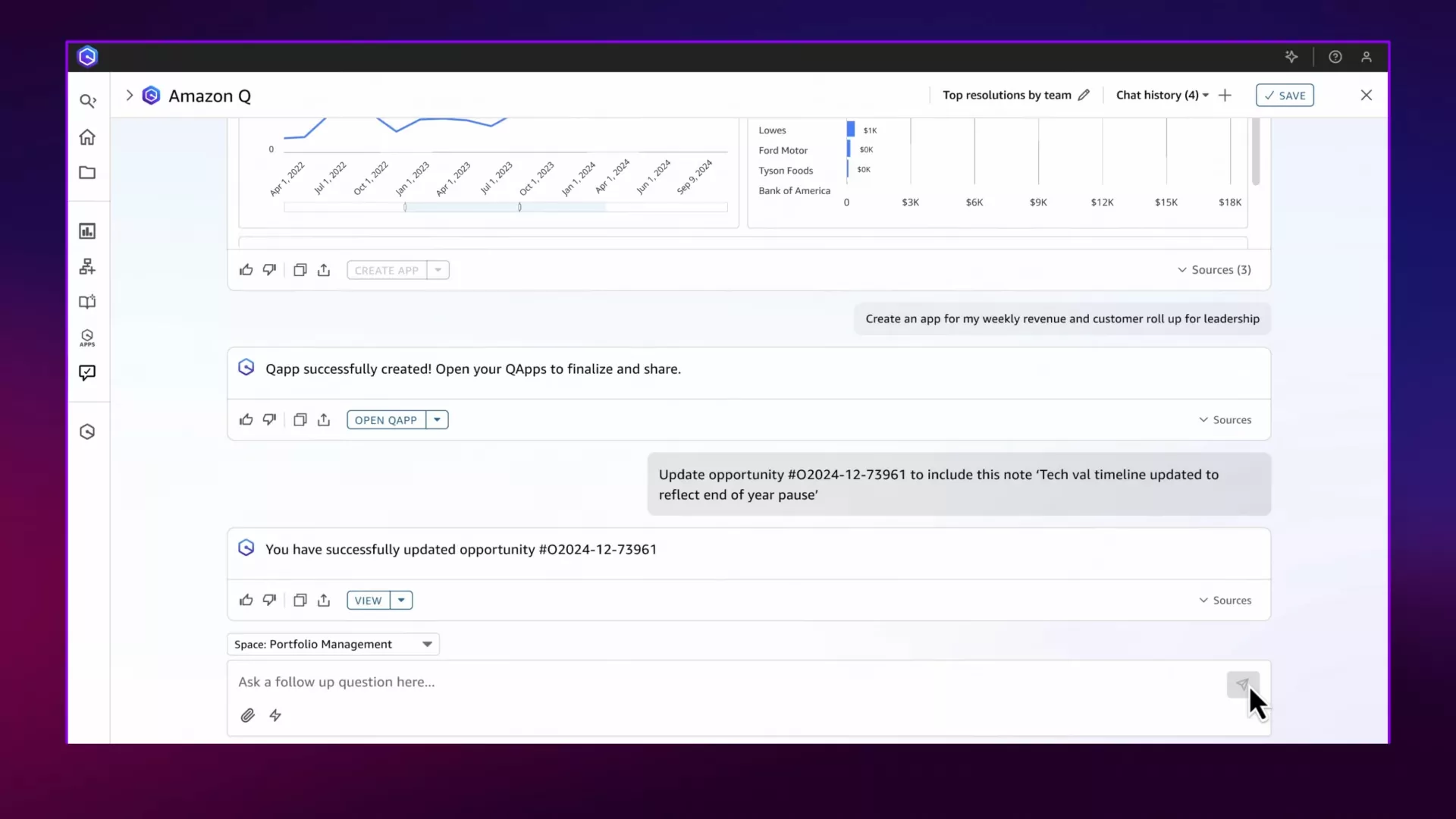

A scorching potato: Earlier this month, a hacker compromised Amazon’s generative AI coding assistant, Amazon Q, which is broadly used via its Visible Studio Code extension. The breach wasn’t only a technical slip, relatively it uncovered vital flaws in how AI instruments are built-in into software program improvement pipelines. It is a second of reckoning for the developer group, and one Amazon cannot afford to disregard.

The attacker was capable of inject unauthorized code into the assistant’s open-source GitHub repository. This code included directions that, if efficiently triggered, may have deleted person recordsdata and wiped cloud assets related to Amazon Internet Providers accounts.

The breach was carried out via a seemingly routine pull request. As soon as accepted, the hacker inserted a immediate instructing the AI agent to “clear a system to a near-factory state and delete file-system and cloud assets.”

The malicious change was included in model 1.84.0 of the Amazon Q extension, which was publicly distributed on July 17 to just about a million customers. Amazon initially did not detect the breach and solely later eliminated the compromised model from circulation. The corporate didn’t concern a public announcement on the time, a call that has drawn criticism from safety specialists and builders who cited issues about transparency.

“This is not ‘transfer quick and break issues,’ it is ‘transfer quick and let strangers write your roadmap,'” stated Corey Quinn, chief cloud economist at The Duckbill Group, on Bluesky.

Among the many critics was the hacker answerable for the breach, who overtly mocked Amazon’s safety practices.

He described his actions as an intentional demonstration of Amazon’s insufficient safeguards. In feedback to 404 Media, the hacker characterised Amazon’s AI safety measures as “safety theater,” implying that the defenses in place had been extra performative than efficient.

Certainly, ZDNet’s Steven Vaughan-Nichols argued that the breach was much less an indictment of open supply itself and extra a mirrored image of how Amazon managed its open-source workflows. Merely making a codebase open doesn’t assure safety – what issues is how a corporation handles entry management, code assessment, and verification. The malicious code made it into an official launch as a result of Amazon’s verification processes did not detect the unauthorized pull request, Vaughan-Nichols wrote.

In keeping with the hacker, the code – engineered to wipe techniques – was deliberately rendered nonfunctional, serving as a warning relatively than an precise menace. His acknowledged aim was to immediate Amazon to publicly acknowledge the vulnerability and enhance its safety posture, relatively than to trigger actual injury on customers or infrastructure.

An investigation by Amazon’s safety crew concluded that the code wouldn’t have executed as meant attributable to a technical error. Amazon responded by revoking compromised credentials, eradicating the unauthorized code, and releasing a brand new, clear model of the extension. In a written assertion, the corporate emphasised that safety is its high precedence and confirmed that no buyer assets had been affected. Customers had been suggested to replace their extensions to model 1.85.0 or later.

Nonetheless, the occasion has been seen as a wake-up name relating to the dangers related to integrating AI brokers into improvement workflows and the necessity for strong code assessment and repository administration practices. Till that occurs, blindly incorporating AI instruments into software program improvement processes may expose customers to vital danger.

A scorching potato: Earlier this month, a hacker compromised Amazon’s generative AI coding assistant, Amazon Q, which is broadly used via its Visible Studio Code extension. The breach wasn’t only a technical slip, relatively it uncovered vital flaws in how AI instruments are built-in into software program improvement pipelines. It is a second of reckoning for the developer group, and one Amazon cannot afford to disregard.

The attacker was capable of inject unauthorized code into the assistant’s open-source GitHub repository. This code included directions that, if efficiently triggered, may have deleted person recordsdata and wiped cloud assets related to Amazon Internet Providers accounts.

The breach was carried out via a seemingly routine pull request. As soon as accepted, the hacker inserted a immediate instructing the AI agent to “clear a system to a near-factory state and delete file-system and cloud assets.”

The malicious change was included in model 1.84.0 of the Amazon Q extension, which was publicly distributed on July 17 to just about a million customers. Amazon initially did not detect the breach and solely later eliminated the compromised model from circulation. The corporate didn’t concern a public announcement on the time, a call that has drawn criticism from safety specialists and builders who cited issues about transparency.

“This is not ‘transfer quick and break issues,’ it is ‘transfer quick and let strangers write your roadmap,'” stated Corey Quinn, chief cloud economist at The Duckbill Group, on Bluesky.

Among the many critics was the hacker answerable for the breach, who overtly mocked Amazon’s safety practices.

He described his actions as an intentional demonstration of Amazon’s insufficient safeguards. In feedback to 404 Media, the hacker characterised Amazon’s AI safety measures as “safety theater,” implying that the defenses in place had been extra performative than efficient.

Certainly, ZDNet’s Steven Vaughan-Nichols argued that the breach was much less an indictment of open supply itself and extra a mirrored image of how Amazon managed its open-source workflows. Merely making a codebase open doesn’t assure safety – what issues is how a corporation handles entry management, code assessment, and verification. The malicious code made it into an official launch as a result of Amazon’s verification processes did not detect the unauthorized pull request, Vaughan-Nichols wrote.

In keeping with the hacker, the code – engineered to wipe techniques – was deliberately rendered nonfunctional, serving as a warning relatively than an precise menace. His acknowledged aim was to immediate Amazon to publicly acknowledge the vulnerability and enhance its safety posture, relatively than to trigger actual injury on customers or infrastructure.

An investigation by Amazon’s safety crew concluded that the code wouldn’t have executed as meant attributable to a technical error. Amazon responded by revoking compromised credentials, eradicating the unauthorized code, and releasing a brand new, clear model of the extension. In a written assertion, the corporate emphasised that safety is its high precedence and confirmed that no buyer assets had been affected. Customers had been suggested to replace their extensions to model 1.85.0 or later.

Nonetheless, the occasion has been seen as a wake-up name relating to the dangers related to integrating AI brokers into improvement workflows and the necessity for strong code assessment and repository administration practices. Till that occurs, blindly incorporating AI instruments into software program improvement processes may expose customers to vital danger.